PROJECT NAME :: Trash Cyclone

DESCRIPTION :: Trash Cyclone aims to raise awareness of how much trash we create in our daily lives. It uses Tango's Motion Tracking feature to place the user at the center of the universe as the one main gravitational force: the user is the attractor, and all the trash in the app is pulled toward them. The user has to move or run away from the trash. Otherwise, the user's view slowly goes dark and the user dies.

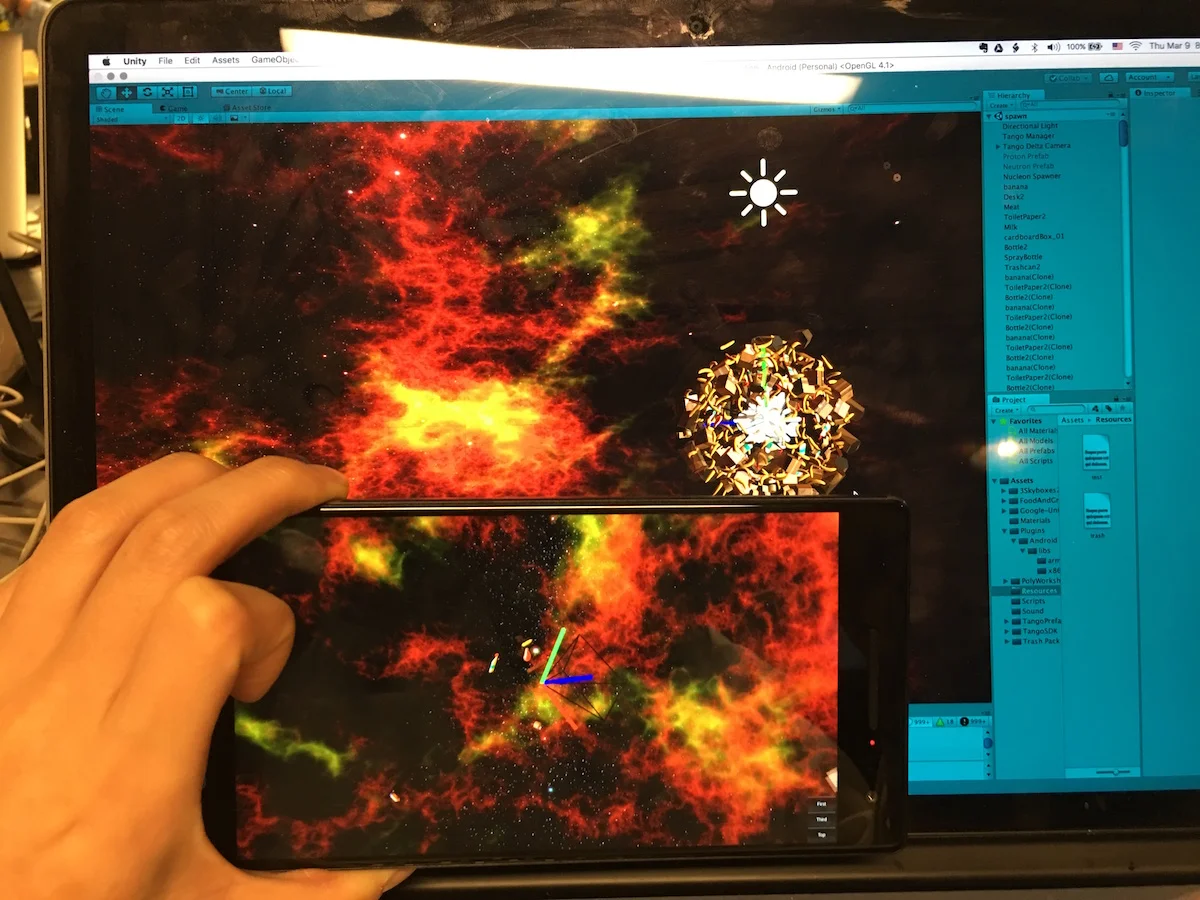

BACKGROUND :: SKYBOX

The skybox feature in Unity is amazing! I love it! I cannot believe how seamlessly all the planes (top, right, left, bottom, and back) are connected. There are no visible edges!

Here, I use the Tango AR Delta Camera. The Delta Camera lets me see my app in First Person, Third Person, and Top views.

BACKGROUND :: REAL WORLD

Now I have switched to the Tango AR Camera to see the real world as the background. The Tango AR Camera only has a First Person view; it doesn't have the three view modes (First Person, Third Person, and Top) that the Delta Camera has.

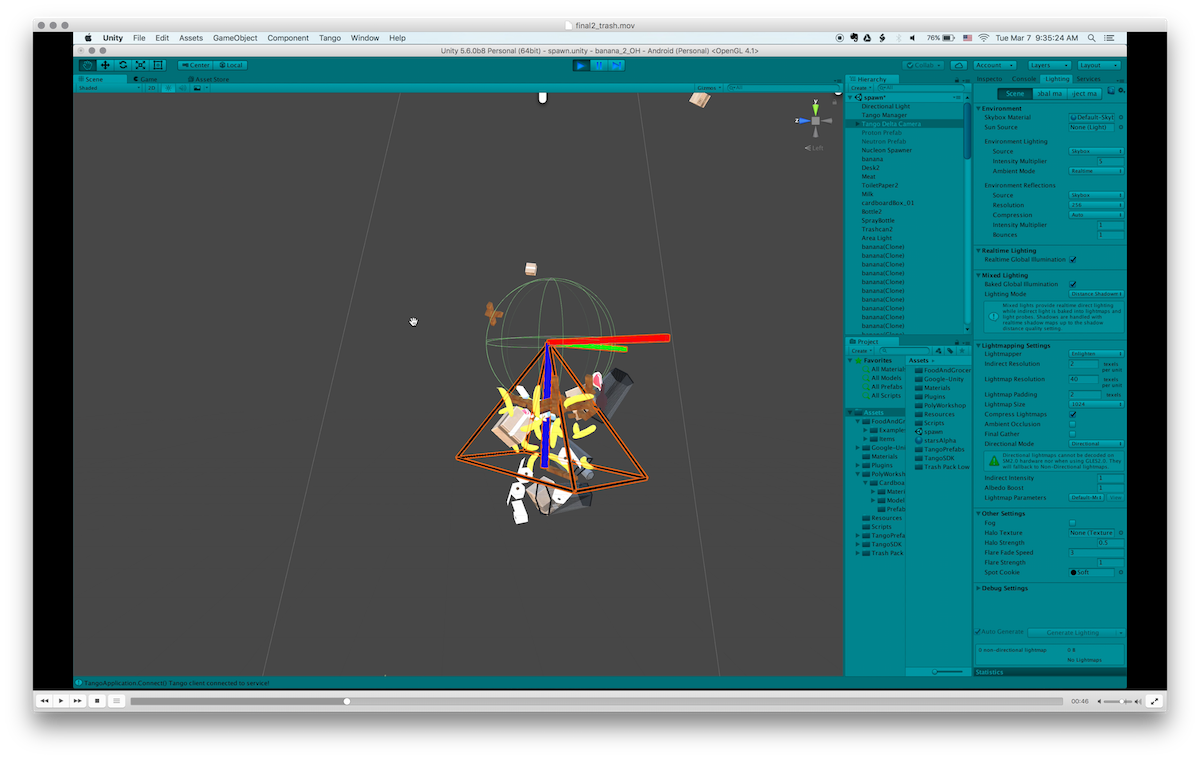

RIGID BODY :: FORCE :: GRAVITY

Rigid Body, Force, and Gravity in Unity are amazing tools once I got them working! It took me a while to understand (still not totally) Unity's terminology and techniques. I really like that I have the control to simulate Earth's gravity, or to make my app exist in a zero-gravity world, just like in space!

The attracted objects (the spawners and the trash prefabs: bananas, boxes, etc.) cannot have any gravity. It has to be turned off for them, with only the camera's gravity turned on. Otherwise they would all fall and gather at the bottom of the sphere.

The camera has to be linked to each of the trash prefabs. Otherwise, they would not follow the camera and would not be attracted to the user as the user moves in the physical world.
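To make the idea concrete, here is a minimal sketch of the attraction logic in plain Python rather than Unity C#. It is not the code from my app: the names `Body`, `attraction_force`, and `step`, the inverse-square falloff, and the `strength` and `min_dist` values are all assumptions chosen for illustration. It shows the two points above: each piece of trash needs a reference to the attractor's position (the camera), and world gravity has to be zero for the trash or it simply falls.

```python
import math
from dataclasses import dataclass


@dataclass
class Body:
    """A piece of trash (hypothetical stand-in for a prefab with a rigid body)."""
    pos: tuple
    vel: tuple = (0.0, 0.0, 0.0)
    mass: float = 1.0


def attraction_force(body_pos, attractor_pos, strength=5.0, min_dist=0.5):
    """Force pulling a body toward the attractor (the camera / user).

    Assumes an inverse-square falloff; the distance is clamped to
    min_dist so the force does not blow up when the trash is very close.
    """
    delta = [a - b for a, b in zip(attractor_pos, body_pos)]
    dist = math.sqrt(sum(d * d for d in delta))
    if dist == 0.0:
        return (0.0, 0.0, 0.0)
    clamped = max(dist, min_dist)
    magnitude = strength / (clamped * clamped)
    return tuple(magnitude * d / dist for d in delta)


def step(bodies, attractor_pos, dt=0.02, world_gravity=(0.0, 0.0, 0.0)):
    """Advance every body by one time step (simple Euler integration).

    world_gravity defaults to zero, which mirrors turning gravity off
    on the trash so that only the attractor moves it.
    """
    for b in bodies:
        force = attraction_force(b.pos, attractor_pos)
        acc = tuple(f / b.mass + g for f, g in zip(force, world_gravity))
        b.vel = tuple(v + a * dt for v, a in zip(b.vel, acc))
        b.pos = tuple(p + v * dt for p, v in zip(b.pos, b.vel))


if __name__ == "__main__":
    camera = (0.0, 0.0, 0.0)  # would be updated from motion tracking each frame
    banana = Body(pos=(3.0, 0.0, 0.0))
    for _ in range(50):
        step([banana], camera)
    print(banana.pos)
```

In Unity, the rough equivalent would be unchecking Use Gravity on each trash Rigidbody and calling `AddForce` toward the camera's position every physics update. Passing a non-zero `world_gravity` to `step` reproduces the problem described above, with everything drifting downward instead of toward the user.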

BUGS?? GLITCHES??

During development, I found a bug in my code: when I built my app from Unity to the Tango, I could see my trash prefabs being created on my laptop (Unity) but not on the Android phone (Tango).

WHAT'S NEXT?

ADDITIONAL FEATURE :: VIBRATION :: SOUND

I really want to add a vibration feature to my app. I imagined each prefab having a vibration and sound mode, so that when the prefabs are attracted to the center (me / the camera), the phone would vibrate and make a noise each time one of them hits me. The user experience would be much more realistic. As Rui suggested, I tried a customized Android Vibration Plugin from the Unity Asset Store, which costs only $3. In the end I didn't use this feature: I couldn't build my app and kept getting a 'Build Failure' error, which I think happened because I did not add the vibration permission to the Android manifest file correctly. I'll try to add this in afterwards.

Android Vibration Plugin

Unity Normal Setting Vibration (1 sec)

FINAL THOUGHTS

If I ever take this project further, I want to develop an app that raises awareness of how much trash each of us creates each day, month, year, or over our entire lives, and has that trash follow each user through the app. Also, instead of using Unity's 3D models, I would use a special 3D camera to scan actual trash objects and import them into Unity for a more realistic look.